Analysing visual content using HoloLens, Computer Vision APIs, Unity and the Mixed Reality Toolkit

I have recently been exploring how HoloLens/Windows Mixed Reality can be combined with Cognitive Services to analyse and extract information from images captured via the device camera and processed by the Computer Vision APIs in the intelligent cloud. In this article, we’ll explore the steps I followed to create a Unity application that runs on HoloLens and communicates with the Microsoft AI platform.

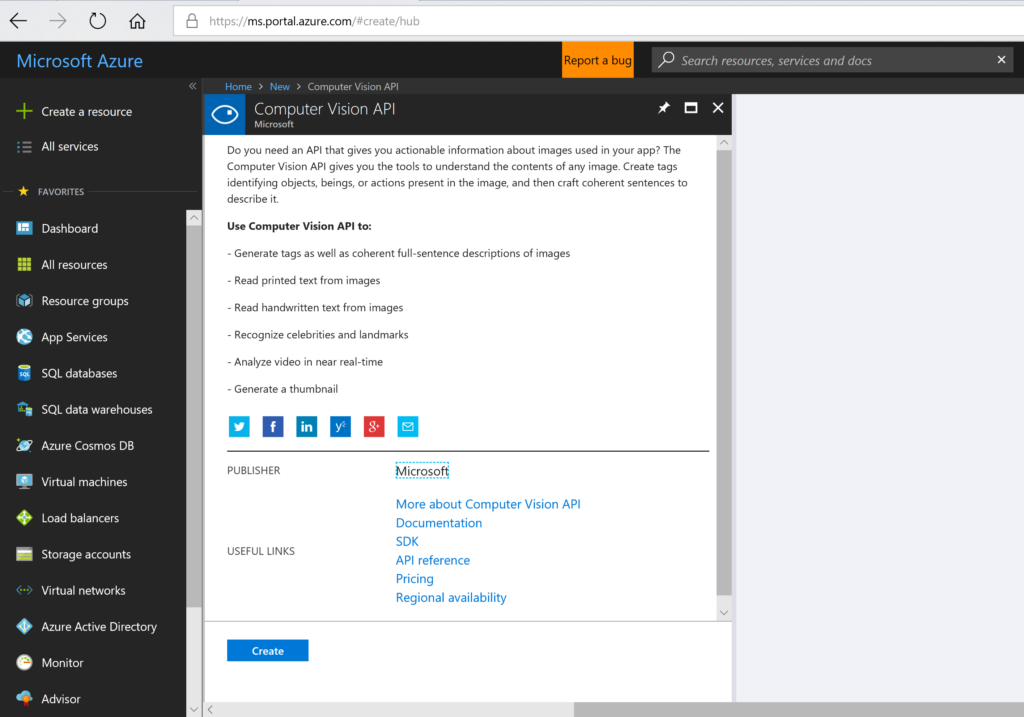

Registering for Computer Vision APIs

The first step was to navigate to the Azure portal https://portal.azure.com and create a new Computer Vision API resource:

I noted down the Keys and Endpoint and started investigating how to approach the code for capturing images on HoloLens and sending them to the intelligent cloud for processing.

Before creating the Unity experience, I decided to start with a simple UWP app for analysing images.

Writing the UWP test app and the shared library

There are already some samples available for the Cognitive Services APIs, so I decided to reuse some of the code described in this article, supplemented by some camera capture UI in UWP.

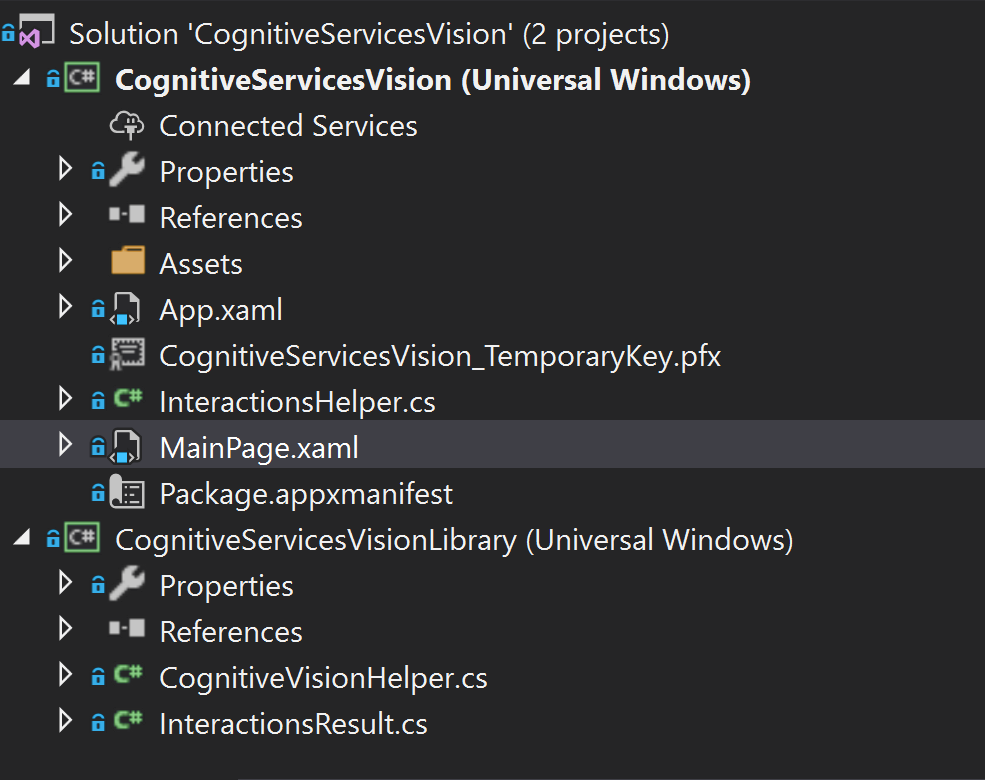

I created a new Universal Windows app and library (CognitiveServicesVisionLibrary) to provide, respectively, a test UI and some reusable code that could be referenced later by the HoloLens experience.

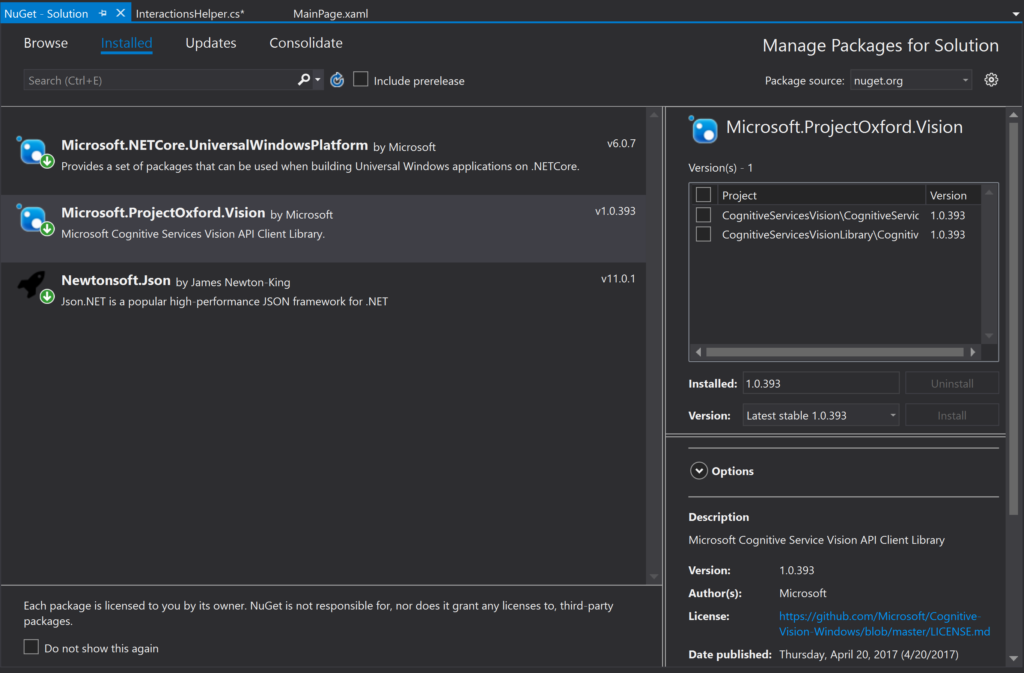

The Computer Vision APIs can be accessed via the Microsoft.ProjectOxford.Vision package available on NuGet, so I added a reference to it in both projects:

The test UI contains an image and two buttons: one for selecting a file using a FileOpenPicker and another for capturing a new image using the CameraCaptureUI. I decided to wrap these two actions in an InteractionsHelper class:

public class InteractionsHelper

{

CognitiveVisionHelper _visionHelper;

public InteractionsHelper()

{

_visionHelper = new CognitiveVisionHelper();

}

public async Task<InteractionsResult> RecognizeUsingCamera()

{

CameraCaptureUI captureUI = new CameraCaptureUI();

captureUI.PhotoSettings.Format = CameraCaptureUIPhotoFormat.Jpeg;

StorageFile file = await captureUI.CaptureFileAsync(CameraCaptureUIMode.Photo);

return await BuildResult(file);

}

public async Task<InteractionsResult> RecognizeFromFile()

{

var openPicker = new FileOpenPicker

{

ViewMode = PickerViewMode.Thumbnail,

SuggestedStartLocation = PickerLocationId.PicturesLibrary

};

openPicker.FileTypeFilter.Add(".jpg");

openPicker.FileTypeFilter.Add(".jpeg");

openPicker.FileTypeFilter.Add(".png");

openPicker.FileTypeFilter.Add(".gif");

openPicker.FileTypeFilter.Add(".bmp");

var file = await openPicker.PickSingleFileAsync();

return await BuildResult(file);

}

private async Task<InteractionsResult> BuildResult(StorageFile file)

{

InteractionsResult result = null;

if (file != null)

{

var bitmap = await _visionHelper.ConvertImage(file);

var results = await _visionHelper.AnalyzeImage(file);

var output = _visionHelper.ExtractOutput(results);

result = new InteractionsResult {

Description = output,

Image = bitmap,

AnalysisResult = results

};

}

return result;

}

}

I then worked on the shared library, creating a helper class that processes the image using the Vision APIs available in Microsoft.ProjectOxford.Vision and parses the result.

Tip: after creating the VisionServiceClient, I received an unauthorised error when specifying only the key: the error disappeared by also specifying the endpoint URL available in the Azure portal.

using Microsoft.ProjectOxford.Vision;

using Microsoft.ProjectOxford.Vision.Contract;

using System;

using System.IO;

using System.Threading.Tasks;

using Windows.Storage;

using Windows.Storage.Streams;

using Windows.UI.Xaml.Media;

using Windows.UI.Xaml.Media.Imaging;

public class CognitiveVisionHelper

{

string _subscriptionKey;

string _apiRoot;

public CognitiveVisionHelper()

{

_subscriptionKey = "SubKey";

_apiRoot = "ApiRoot";

}

private VisionServiceClient GetVisionServiceClient()

{

return new VisionServiceClient(_subscriptionKey, _apiRoot);

}

public async Task<ImageSource> ConvertImage(StorageFile file)

{

var bitmapImage = new BitmapImage();

// Dispose the stream once the bitmap has been loaded, and load it without blocking the UI thread

using (IRandomAccessStream stream = await file.OpenAsync(FileAccessMode.Read))

{

await bitmapImage.SetSourceAsync(stream);

}

return bitmapImage;

}

public async Task<AnalysisResult> AnalyzeImage(StorageFile file)

{

var visionServiceClient = GetVisionServiceClient();

using (Stream imageFileStream = await file.OpenStreamForReadAsync())

{

// Analyze the image for all visual features

VisualFeature[] visualFeatures = new VisualFeature[] { VisualFeature.Adult, VisualFeature.Categories

, VisualFeature.Color, VisualFeature.Description, VisualFeature.Faces, VisualFeature.ImageType

, VisualFeature.Tags };

AnalysisResult analysisResult = await visionServiceClient.AnalyzeImageAsync(imageFileStream, visualFeatures);

return analysisResult;

}

}

public async Task<OcrResults> AnalyzeImageForText(StorageFile file, string language)

{

var visionServiceClient = GetVisionServiceClient();

using (Stream imageFileStream = await file.OpenStreamForReadAsync())

{

OcrResults ocrResult = await visionServiceClient.RecognizeTextAsync(imageFileStream, language);

return ocrResult;

}

}

public string ExtractOutput(AnalysisResult analysisResult)

{

return analysisResult.Description.Captions[0].Text;

}

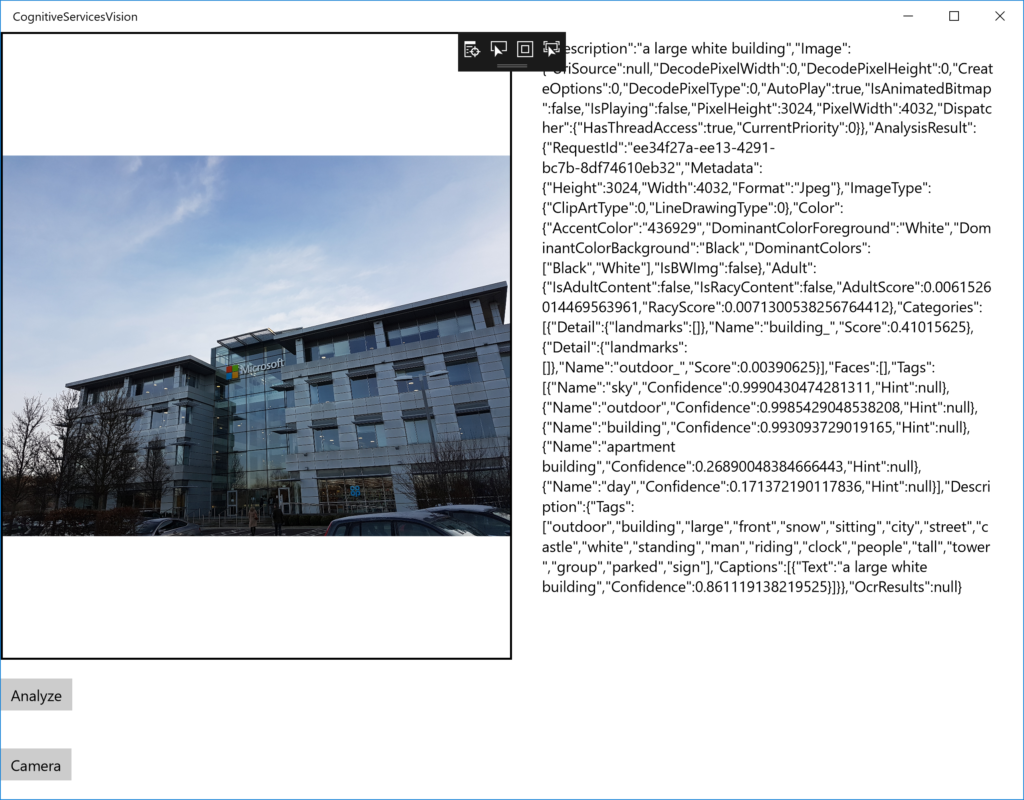

}I then launched the test UI, and the image was successfully analysed, and the results returned from the Computer Vision APIs, in this case identifying a building and several other tags like outdoor, city, park: great!
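Since the service returns tags as well as a caption, the spoken or displayed output could combine both. The following is only a minimal sketch in plain .NET: `DescriptionFormatter`, `BuildSummary` and the `maxTags` parameter are hypothetical names that are not part of the original project. With the library, the inputs would presumably come from `analysisResult.Description.Captions` and `analysisResult.Description.Tags`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not part of the original sample.
public static class DescriptionFormatter
{
    // Builds a short sentence from a caption and a list of tags.
    // Both inputs may be null or empty, e.g. when the service returns no caption.
    public static string BuildSummary(string caption, IEnumerable<string> tags, int maxTags = 3)
    {
        // Keep only the first few non-empty tags.
        var topTags = (tags ?? Enumerable.Empty<string>())
            .Where(t => !string.IsNullOrWhiteSpace(t))
            .Take(maxTags)
            .ToList();

        var text = string.IsNullOrWhiteSpace(caption)
            ? "No description available"
            : caption.Trim();

        return topTags.Count == 0
            ? text
            : text + " (tags: " + string.Join(", ", topTags) + ")";
    }
}
```

For example, calling `DescriptionFormatter.BuildSummary("a large building", new[] { "outdoor", "city", "park", "sky" })` keeps the caption and appends the first three tags.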

I also added a Speech Synthesizer playing the general description returned by the Cognitive Services call:

private async void Speak(string Text)

{

MediaElement mediaElement = this.media;

var synth = new SpeechSynthesizer();

SpeechSynthesisStream stream = await synth.SynthesizeTextToStreamAsync(Text);

mediaElement.SetSource(stream, stream.ContentType);

mediaElement.Play();

}

I then moved to HoloLens and started creating the interface using Unity, the Mixed Reality Toolkit and UWP.

Creating the Unity HoloLens experience

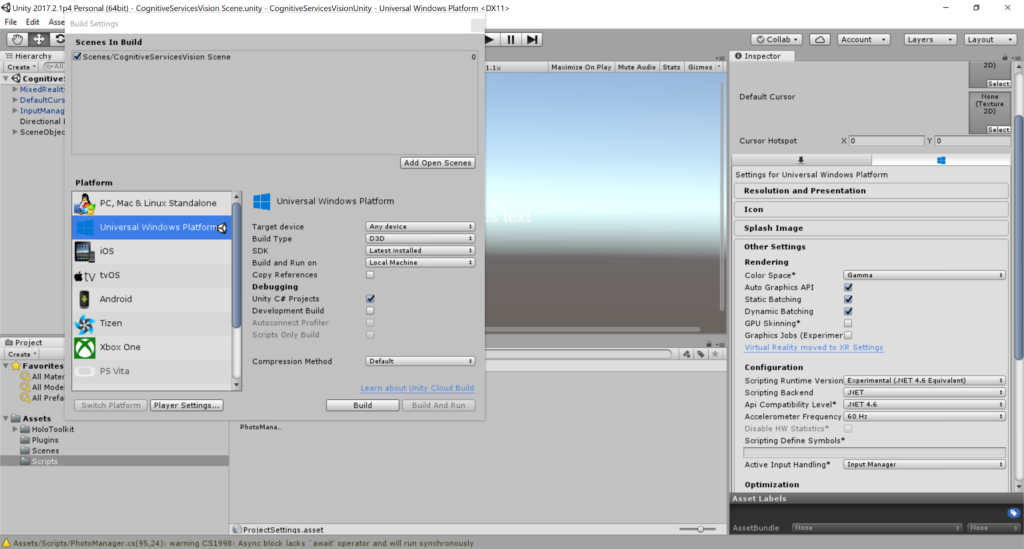

First of all, I created a new Unity project using Unity 2017.2.1p4 and then added a new folder named Scenes and saved the active scene as CognitiveServicesVision Scene.

I downloaded the corresponding version of the Mixed Reality Toolkit from the releases section of the GitHub project https://github.com/Microsoft/MixedRealityToolkit-Unity/releases and imported the toolkit package HoloToolkit-Unity-2017.2.1.1.unitypackage using the menu Assets->Import Package->Custom package.

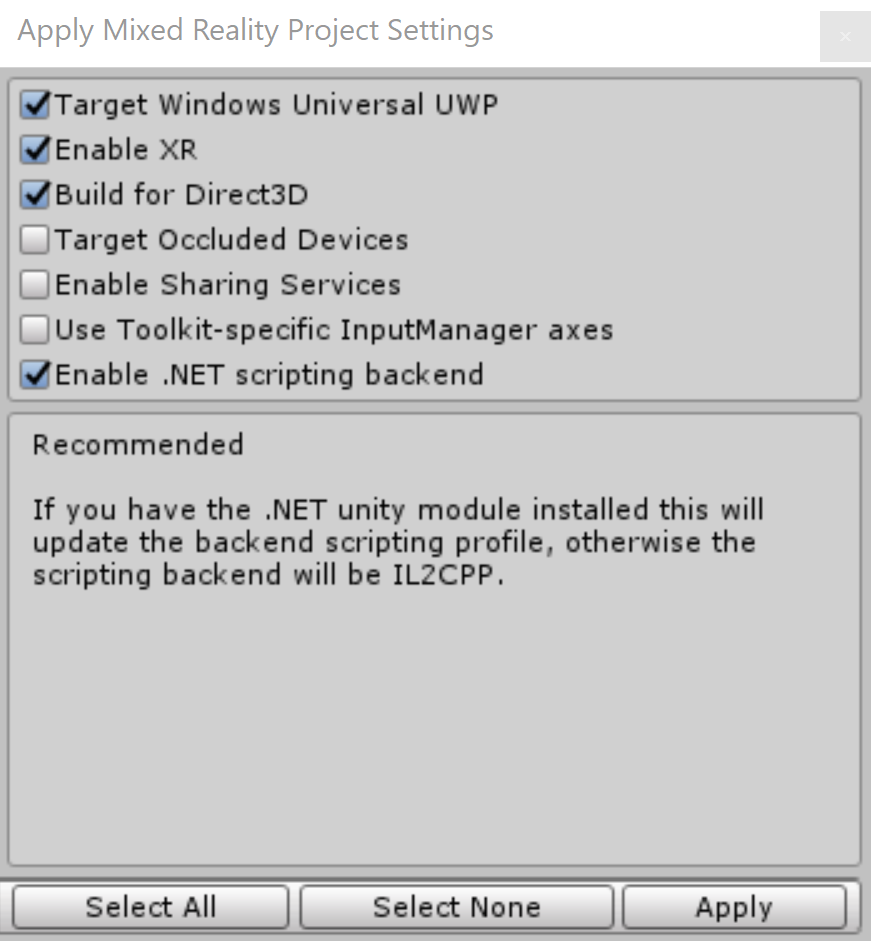

Then, I applied the Mixed Reality Project settings using the corresponding item in the toolkit menu:

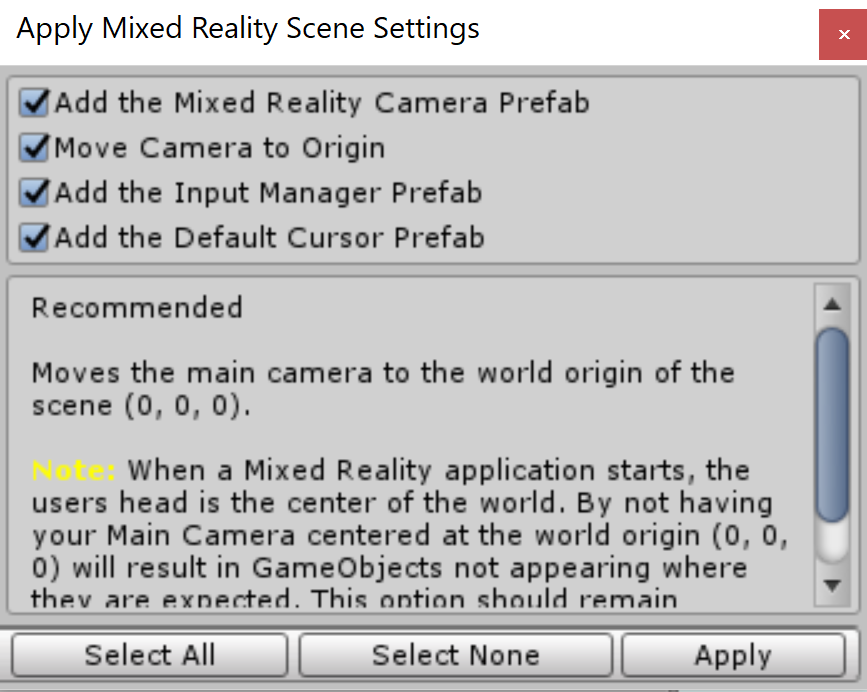

I then selected the Scene Settings, adding the Camera, Input Manager and Default Cursor prefabs:

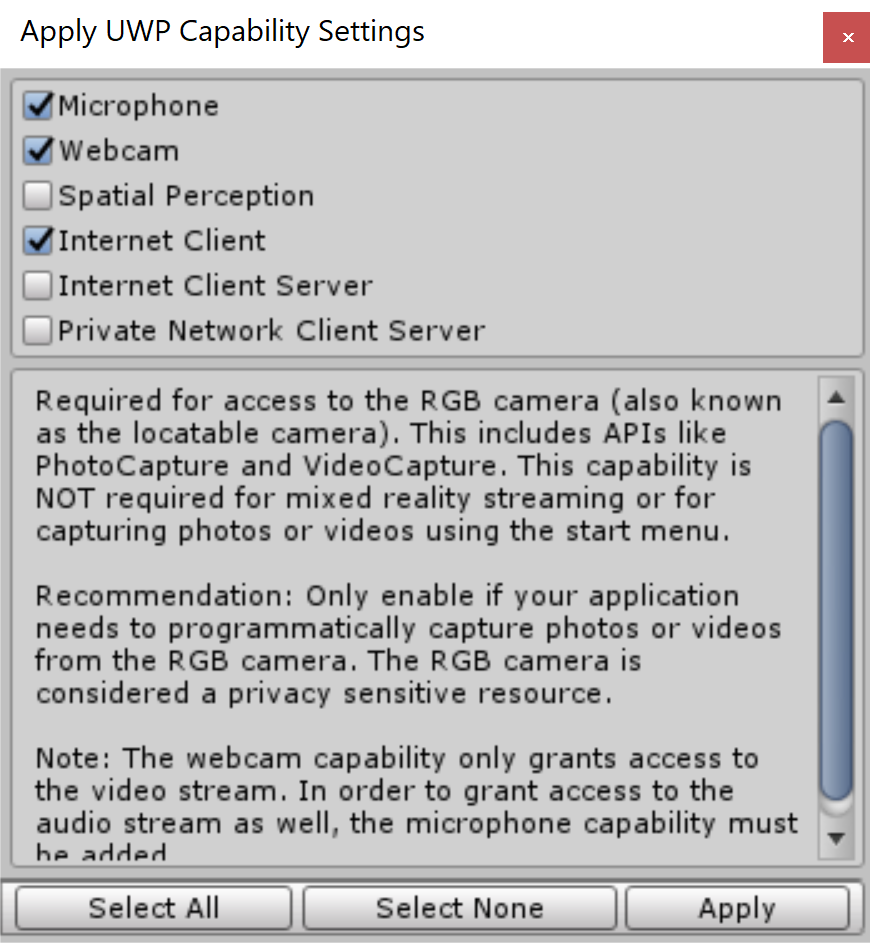

Finally, I set the UWP capabilities, as I needed access to the camera to retrieve the image, the microphone for speech recognition and the internet client to communicate with Cognitive Services:

I was then ready to add the logic to retrieve the image from the camera, save it to the HoloLens device and then call the Computer Vision APIs.

Creating the Unity Script

The CameraCaptureUI UWP API is not available in HoloLens, so I had to research a way to capture an image from Unity, save it to the device and then convert it to a StorageFile ready to be used by the CognitiveServicesVisionLibrary implemented as part of the previous project.
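The "save it to the device" part of this plan comes down to moving the captured file into a folder the app can later open as a StorageFile. Below is a minimal sketch of that step in plain .NET, under the assumption that the app has access to both locations; `CapturedFileMover` and `MoveInto` are hypothetical names introduced only for illustration.

```csharp
using System.IO;

// Hypothetical helper, not part of the original sample.
public static class CapturedFileMover
{
    // Moves sourcePath into targetFolder, replacing any file with the same name.
    // Returns the full path of the moved file.
    public static string MoveInto(string sourcePath, string targetFolder)
    {
        // No-op when the folder already exists.
        Directory.CreateDirectory(targetFolder);

        var destination = Path.Combine(targetFolder, Path.GetFileName(sourcePath));

        // File.Move fails if the destination exists, so remove it first.
        if (File.Exists(destination))
        {
            File.Delete(destination);
        }

        File.Move(sourcePath, destination);
        return destination;
    }
}
```

The returned path is what would then be handed to an API such as `StorageFile.GetFileFromPathAsync`.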

First of all, I enabled the Experimental (.NET 4.6 Equivalent) Scripting Runtime version in the Unity player for using features like async/await. Then, I enabled the PicturesLibrary capability in the Publishing Settings to save the captured image to the device.
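One thing async/await makes possible is awaiting callback-based APIs instead of chaining handlers. The sketch below shows the general pattern with `TaskCompletionSource`; `CallbackAdapter` and `ToTask` are hypothetical names, and the original project keeps the callback style rather than using this wrapper.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper, not part of the original sample.
public static class CallbackAdapter
{
    // Converts a "start(callback)" style API into an awaitable Task<T>.
    // The task completes when the callback is invoked with a result.
    public static Task<T> ToTask<T>(Action<Action<T>> start)
    {
        var completion = new TaskCompletionSource<T>();
        try
        {
            start(result => completion.TrySetResult(result));
        }
        catch (Exception ex)
        {
            // Surface exceptions thrown while starting the operation through the task.
            completion.TrySetException(ex);
        }
        return completion.Task;
    }
}
```

Under that assumption, creating the capture object could be written as `var capture = await CallbackAdapter.ToTask<PhotoCapture>(done => PhotoCapture.CreateAsync(true, c => done(c)));`, although I have not tested this form on the device.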

Then, I created a Scripts folder and added a new PhotoManager.cs script taking as a starting point the implementation available in this GitHub project.

The script can be attached to a TextMesh component visualising the status:

using HoloToolkit.Unity;

using System;

using System.Linq;

using UnityEngine;

using UnityEngine.XR.WSA.WebCam;

#if UNITY_UWP

using Windows.Storage;

#endif

public class PhotoManager : MonoBehaviour

{

private TextMesh _statusText;

private PhotoCapture _capture;

private TextToSpeech _textToSpeechComponent;

private bool _isCameraReady = false;

private string _currentImagePath;

private string _pictureFolderPath;

#if UNITY_UWP

private CognitiveServicesVisionLibrary.CognitiveVisionHelper _cognitiveHelper;

#endif

private void Start()

{

_statusText = GetComponent<TextMesh>();

_textToSpeechComponent = GetComponent<TextToSpeech>();

#if UNITY_UWP

_cognitiveHelper = new CognitiveServicesVisionLibrary.CognitiveVisionHelper();

#endif

StartCamera();

}

#if UNITY_UWP

private async void getPicturesFolderAsync() {

StorageLibrary picturesStorage = await StorageLibrary.GetLibraryAsync(KnownLibraryId.Pictures);

_pictureFolderPath = picturesStorage.SaveFolder.Path;

}

#endif

Initialising the PhotoCapture API available in Unity (https://docs.unity3d.com/Manual/windowsholographic-photocapture.html):

public void StartCamera()

{

PhotoCapture.CreateAsync(true, OnPhotoCaptureCreated);

#if UNITY_UWP

getPicturesFolderAsync();

#endif

}

private void OnPhotoCaptureCreated(PhotoCapture captureObject)

{

_capture = captureObject;

Resolution resolution = PhotoCapture.SupportedResolutions.OrderByDescending(res => res.width * res.height).First();

var camera = new CameraParameters(WebCamMode.PhotoMode)

{

hologramOpacity = 1.0f,

cameraResolutionWidth = resolution.width,

cameraResolutionHeight = resolution.height,

pixelFormat = CapturePixelFormat.BGRA32

};

_capture.StartPhotoModeAsync(camera, OnPhotoModeStarted);

}

private void OnPhotoModeStarted(PhotoCapture.PhotoCaptureResult result)

{

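_isCameraReady = result.success;

// Only announce the camera as ready when photo mode actually started

SetStatus(_isCameraReady ? "Camera ready. Say 'Describe' to start" : "Unable to start the camera.");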

}

And then capturing an image using the TakePhoto() public method:

public void TakePhoto()

{

if (_isCameraReady)

{

var fileName = string.Format(@"Image_{0:yyyy-MM-dd_hh-mm-ss-tt}.jpg", DateTime.Now);

_currentImagePath = Application.persistentDataPath + "/" + fileName;

_capture.TakePhotoAsync(_currentImagePath, PhotoCaptureFileOutputFormat.JPG, OnCapturedPhotoToDisk);

}

else

{

SetStatus("The camera is not yet ready.");

}

}

Saving the photo to the pictures library folder and then passing it to the library created in the previous section:

private async void OnCapturedPhotoToDisk(PhotoCapture.PhotoCaptureResult result)

{

if (result.success)

{

#if UNITY_UWP

try

{

if(_pictureFolderPath != null)

{

var newFile = System.IO.Path.Combine(_pictureFolderPath, "Camera Roll",

System.IO.Path.GetFileName(_currentImagePath));

if (System.IO.File.Exists(newFile))

{

System.IO.File.Delete(newFile);

}

System.IO.File.Move(_currentImagePath, newFile);

var storageFile = await StorageFile.GetFileFromPathAsync(newFile);

SetStatus("Analysing picture...");

var visionResult = await _cognitiveHelper.AnalyzeImage(storageFile);

var description = _cognitiveHelper.ExtractOutput(visionResult);

SetStatus(description);

}

}

catch(Exception e)

{

// Log the details so failures can be diagnosed from the log

Debug.LogException(e);

SetStatus("Error processing image");

}

#endif

}

else

{

SetStatus("Failed to save photo");

}

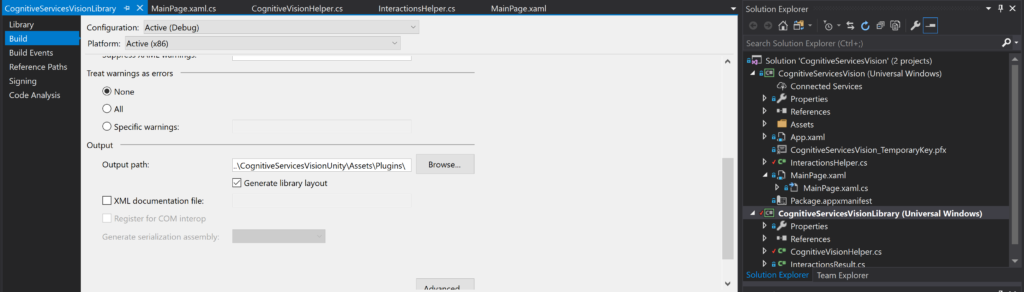

}The code references the CognitiveServicesVisionLibrary UWP library created previously: to use it from Unity, I created a new Plugins folder in my project and ensured that the Build output of the Visual Studio library project was copied to this folder:

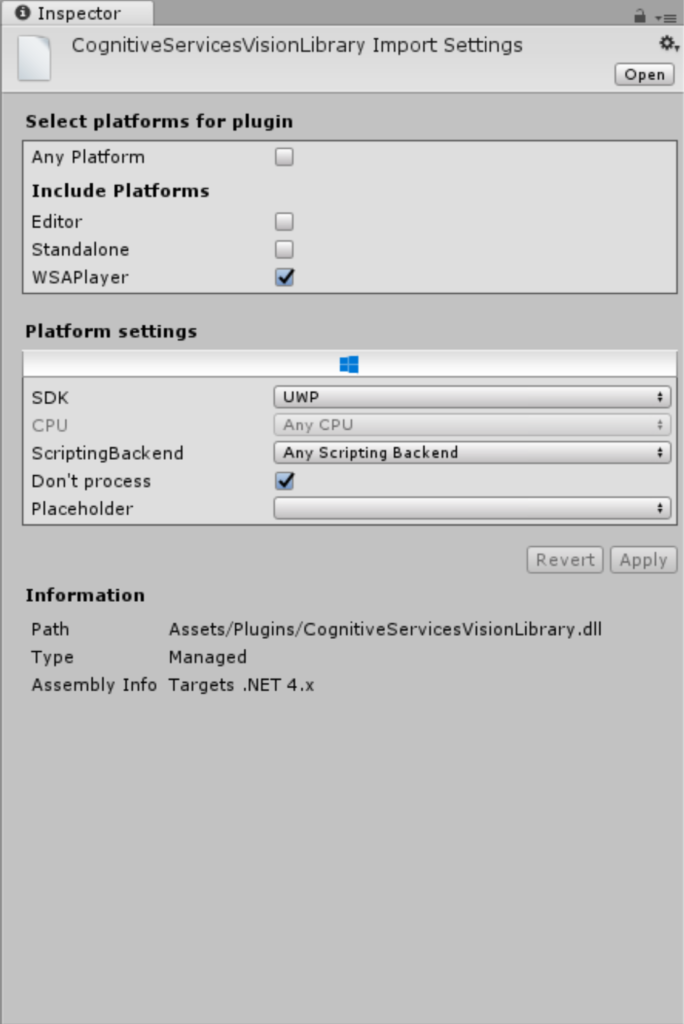

And then set the import settings in Unity for the custom library:

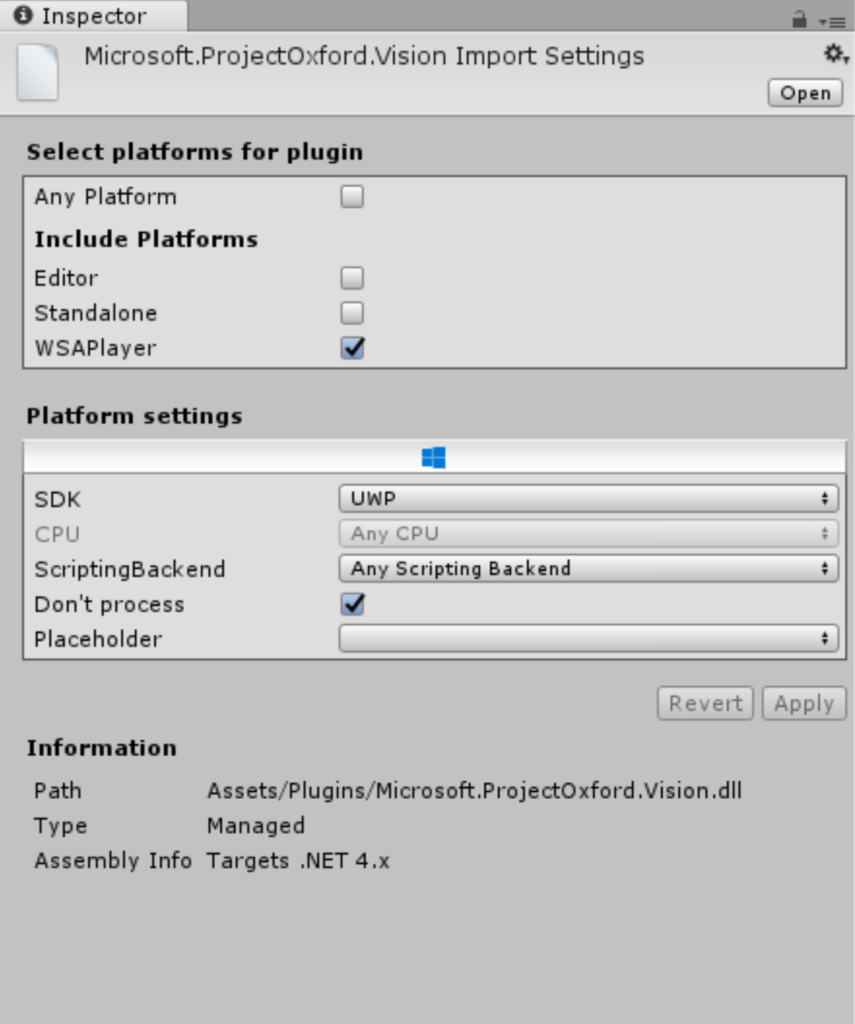

And for the NuGet library too:

Nearly there! Let’s see now how I enabled Speech recognition and Tagalong/Billboard using the Mixed Reality Toolkit.

Enabling Speech

I decided to implement a very minimal UI for this project, using the speech capabilities available in HoloLens for all the interactions.

In this way, a user can simply say the word Describe to trigger the image acquisition and the processing by the Computer Vision API, and then listen to the results.

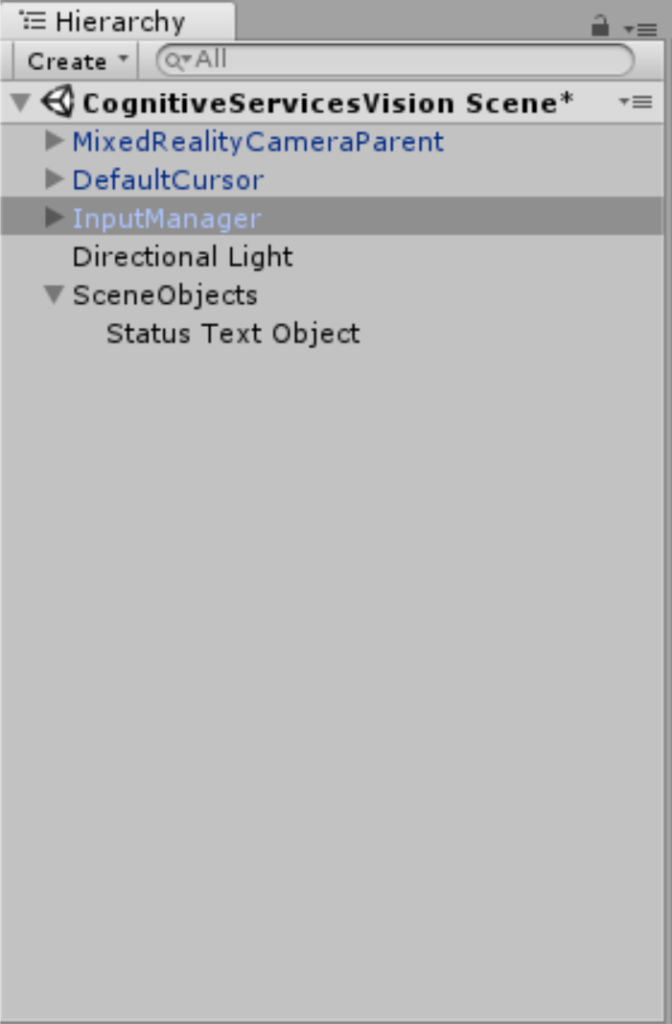

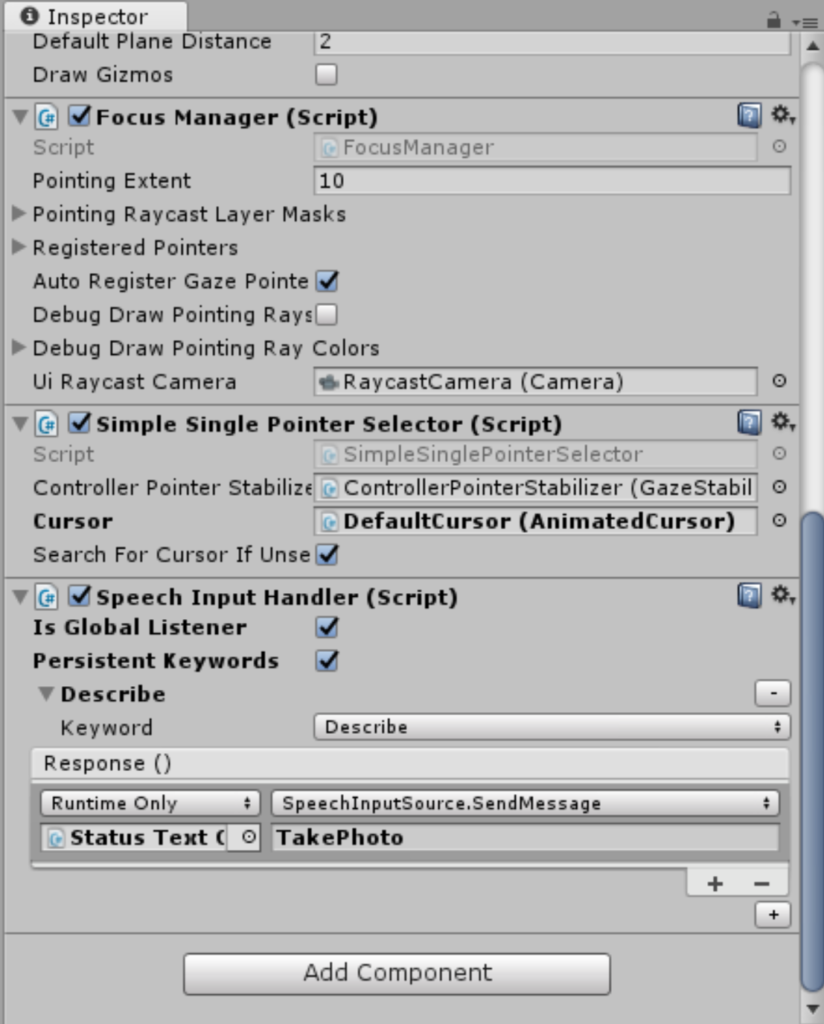

In the Unity project, I selected the InputManager object:

And added a new Speech Input Handler Component to it:

Then, I mapped the keyword Describe to the TakePhoto() method available in the PhotoManager.cs script, already attached to the TextMesh that I had previously named Status Text Object.

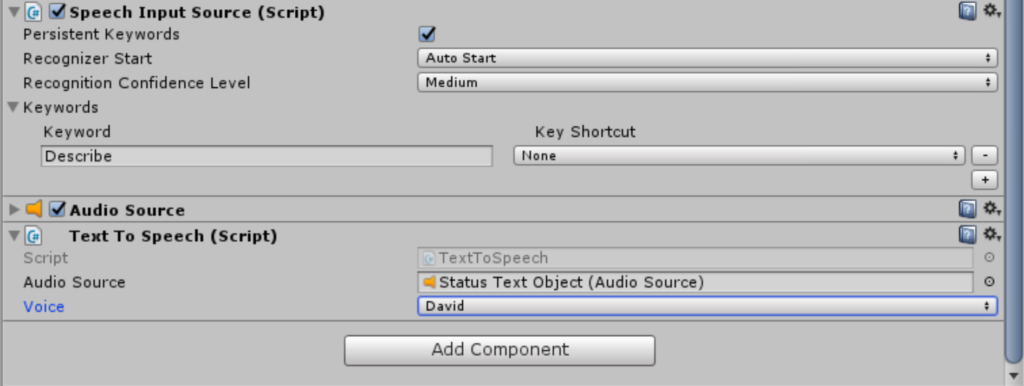

The last step was to enable Text to Speech for the output: I simply added a Text to Speech component to my TextMesh:

And enabled the speech in the script using StartSpeaking():

…

_textToSpeechComponent = GetComponent<TextToSpeech>();

…

private void SetStatus(string statusText)

{

_statusText.text = statusText;

Speak(statusText);

}

private void Speak(string description)

{

_textToSpeechComponent.StartSpeaking(description);

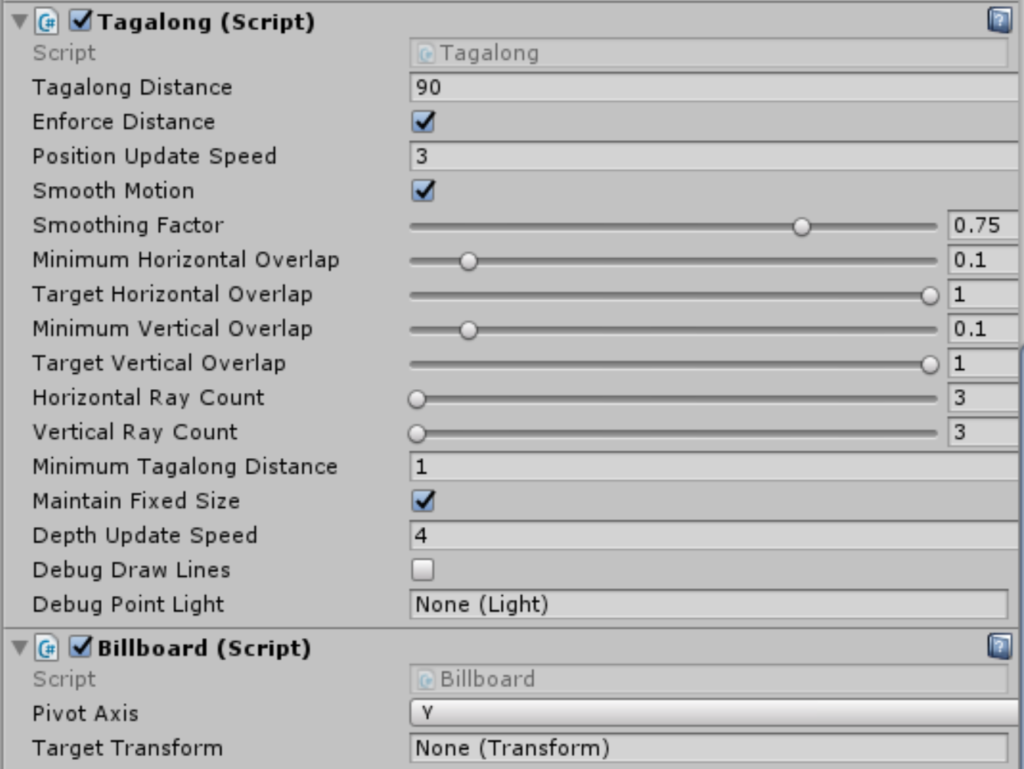

}I also added other two components available in the Mixed Reality Toolkit: Tagalong and Billboard to have the status text following me and not anchored to a specific location:

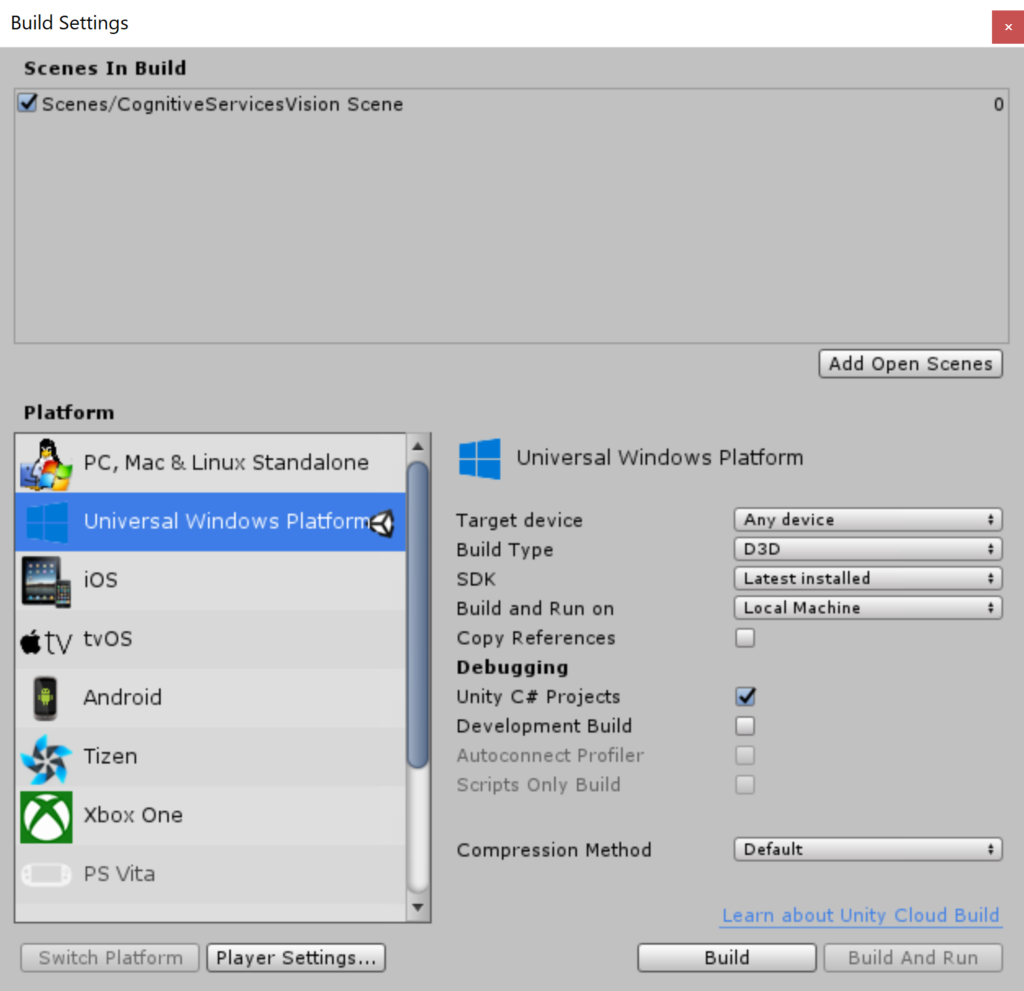

I was then able to generate the final package using Unity specifying the starting scene:

And then I deployed the solution to the HoloLens device and started extracting and categorising visual data using HoloLens, Camera, Speech and the Cognitive Services Computer Vision APIs.

Conclusions

The combination of Mixed Reality and Cognitive Services opens a new world of experiences combining the capabilities of HoloLens and all the power of the intelligent cloud. In this article, I’ve analysed the Computer Vision APIs, but a similar approach could be applied to augment Windows Mixed Reality apps and enrich them with the AI platform https://www.microsoft.com/en-gb/ai.

The source code for this article is available on GitHub: https://github.com/davidezordan/CognitiveServicesSamples